The start of the fall semester is right around the corner, and with it comes a whole new year of buggy! I hope you’re ready to hit the ground running and brimming with ideas to make the sport faster, safer, and more fun!

Applications for Fall 2024 Buggy Enhancement Grants are now open! We have a whopping $12,000 available for this round of funding! This money belongs to you as a member of the buggy community to enhance your enjoyment of the sport. If you have ideas that you think will make buggy more enjoyable for you, your team, and the greater buggy community, we want to hear from you!

The deadline to submit applications is Sunday, September 15th.

Apply Here: https://cmubuggy.org/2024fallgrant

Keep an eye on your news feed for announcements about brainstorming and grant writing sessions. If you would like to get early feedback from a member of the committee to give your proposal the greatest possible chance of receiving funding, please feel free to reach out on the BAA Discord.

Submissions open today and are due by Sunday, September 15th. The Buggy Endowed Fund Committee will meet on September 19th to give you feedback and ask questions about your proposals. Applicants will have one week to respond to committee feedback before the final vote on September 26th.

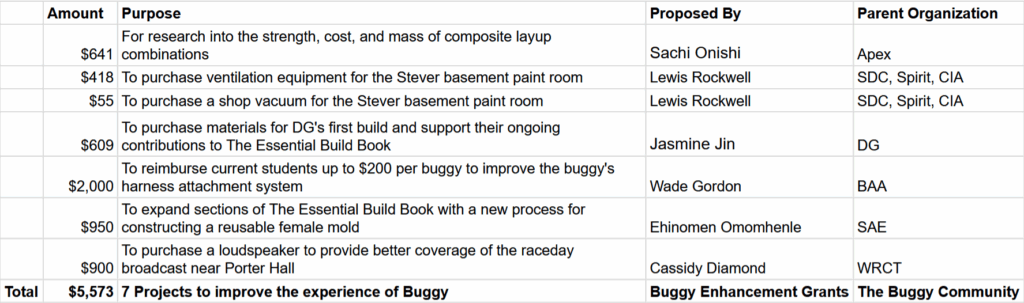

Last year we received 23 grant proposals from 10 different organizations, and funded 11 projects for a total of $5,853.66! Details about these projects can be found here.

Please feel free to take inspiration from these suggestions from the Buggy Endowed Fund Committee:

- Improve the experience of driving a buggy by enhancing visibility, safety, or the quality of the roads.

- Improve the quality of life for the people who help support buggy around the course with a warming hut, covered storage, or better tools (e.g., higher-flow leaf blowers).

- Add to the BAA’s historical archive by professionally digitizing old photos or course footage, buying software to upscale low-resolution photos and videos, or hiring a professional to capture an event.

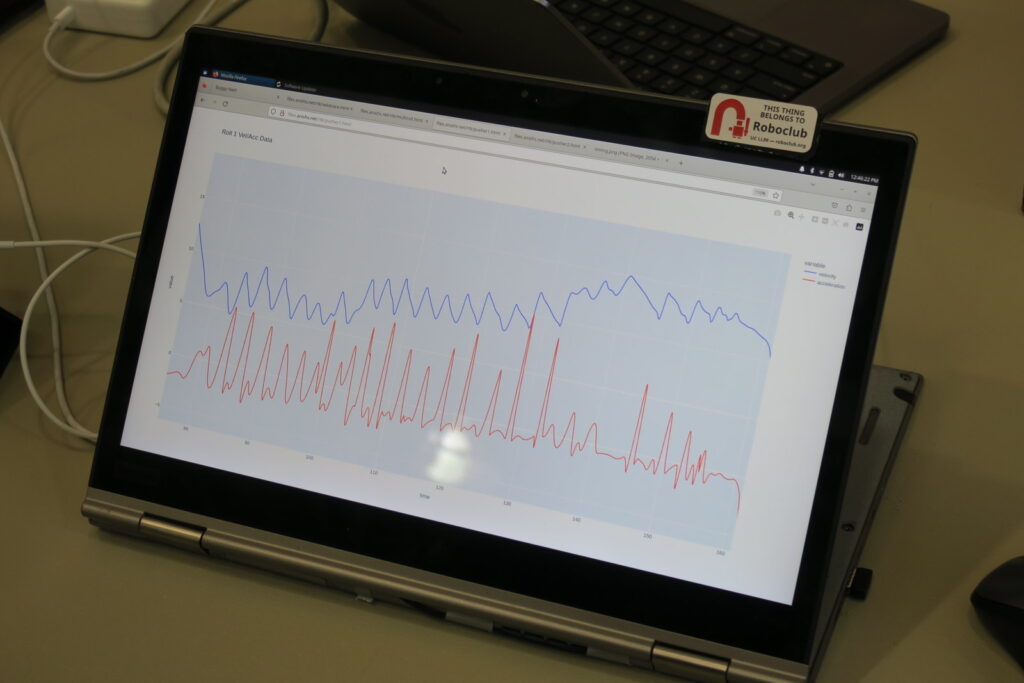

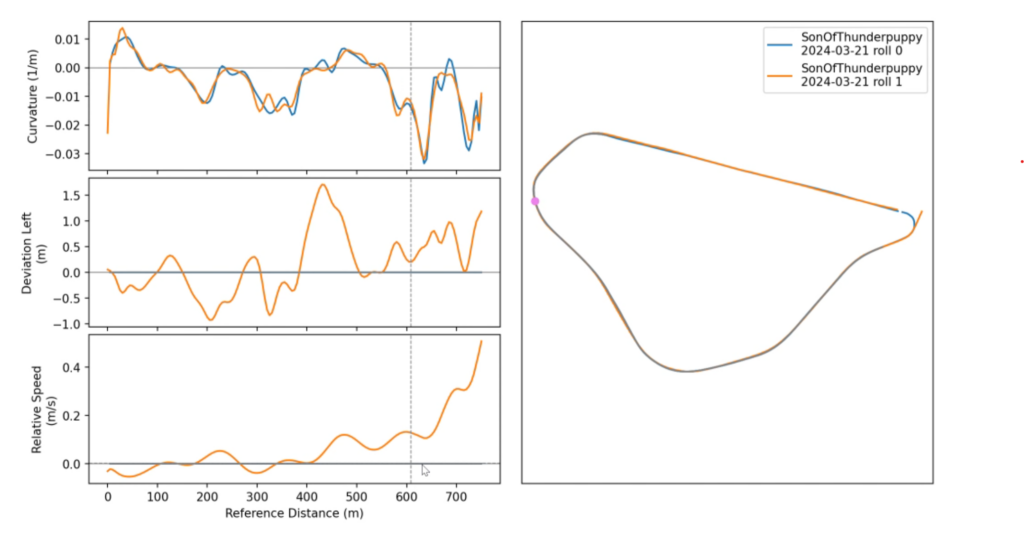

Proposals can even be specific to your team. If yours is, please include some details on how the community as a whole will benefit from funding your project. For example, you might share documentation of your process, publish a video, or give a talk summarizing your results. You might build something to collect better data during rolls, test out a new material, or design a wheel mold that can be shared among teams.

Apply Here: https://cmubuggy.org/2024fallgrant

For more information about Buggy Enhancement Grants, including tips from the committee on how to strengthen your proposal and the full text of previously approved grant applications, please read All About Buggy Enhancement Grants. If you have any comments or suggestions to improve the Buggy Enhancement Grant program, please fill out our feedback survey.